What is Encoding in Machine Learning?

“In life, everything is about translation—sometimes between languages, other times between thoughts and actions. In machine learning, we’re constantly translating too—only here, we’re turning categories into numbers.”

When you’re working with machine learning models, one thing you’ll come across often is categorical data—you know, things like colors, job titles, or even types of cuisine. The problem? Your algorithm doesn’t speak ‘Red’ or ‘Italian’; it speaks numbers. This is where encoding comes in.

Encoding is simply about converting these categorical values into a numerical format that your machine learning model can understand and process. Without it, you’d be left with models scratching their heads (metaphorically, of course) trying to figure out what to do with all that text data.

Now, here’s the deal: two of the most common encoding methods are One-Hot Encoding and Dummy Encoding. Both are like translators with slightly different dialects. While they serve a similar purpose, each has its own quirks that can affect how your model performs and how efficient it is with resources.

When Does This Matter?

You might be wondering, why is this translation so important?

Well, when you’re using algorithms like regression models, decision trees, or even complex neural networks, the way you encode your data can dramatically affect both the performance and interpretability of your model. For instance, in linear regression, poor encoding could lead to multicollinearity issues, making your model weaker and less reliable.

But here’s something interesting: Not all encoding techniques are created equal across models. Some work beautifully with tree-based methods, while others shine in deep learning setups. We’ll dive into those nuances, but remember—how you translate your data into numbers could make or break your model’s success.

Understanding One-Hot Encoding

What is One-Hot Encoding?

Now, let’s break down One-Hot Encoding. This method is the machine learning equivalent of giving each category its own VIP pass. Imagine you have a dataset with different categories like colors—Red, Blue, and Green.

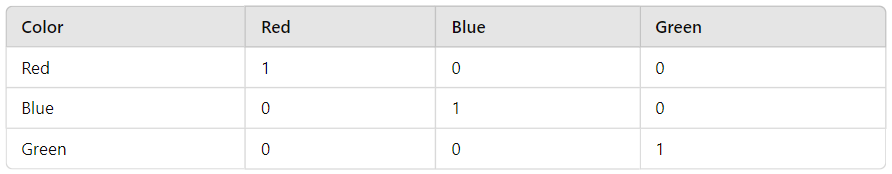

Here’s how it works: One-Hot Encoding creates a separate binary column for each category. So, in our case, it would generate three new columns, one for each color. If a row’s category is Red, the column for Red gets a 1, and the columns for Blue and Green get a 0. Pretty simple, right?

It’s a straightforward approach that ensures no overlap between categories, giving each one its own unique representation.

Detailed Example:

Let’s say you’re working with a dataset of colors. Here’s what One-Hot Encoding would do:

Each color gets its own column, and only one column will have a 1 for any given row.
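To make this concrete, here is a minimal sketch using pandas. The toy `color` column and the choice of `pd.get_dummies` are assumptions for illustration; other libraries offer equivalent encoders.

```python
import pandas as pd

# Toy data: the three colors from the example, with Red appearing twice.
df = pd.DataFrame({"color": ["Red", "Blue", "Green", "Red"]})

# One binary column per category; dtype=int gives 0/1 instead of booleans.
# pandas orders the new columns alphabetically: Blue, Green, Red.
one_hot = pd.get_dummies(df["color"], dtype=int)

print(one_hot)
```

Every row of the result contains exactly one 1, in the column that matches that row's color.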

Advantages of One-Hot Encoding:

Now, why would you choose One-Hot Encoding?

- Distinct, unordered representation: One of the big wins here is that One-Hot Encoding gives every category its own column without implying any order or distance between categories, which plain integer labels would do. One caveat: the full set of columns always sums to 1, so in a model with an intercept (such as linear or logistic regression) the columns are perfectly collinear with that intercept. This is the “dummy variable trap” discussed later in this article.

- Easy interpretation: If you’re using algorithms like decision trees or neural networks, One-Hot Encoding is a dream. It gives clear, distinct inputs for each category, which makes it easier for these models to identify patterns.

Drawbacks of One-Hot Encoding:

But let’s not get carried away—One-Hot Encoding has its pitfalls:

- High Dimensionality: If you’re working with a feature that has, say, 100 categories, One-Hot Encoding would create 100 new columns. You can see how this can quickly get out of hand, especially in datasets with high-cardinality categorical features. More columns mean more computational power and potentially more noise for your model to sift through.

- Sparse Matrix: Once One-Hot Encoding creates all these new columns, you’re left with mostly 0s and very few 1s, creating what’s called a sparse matrix. If that matrix is stored densely, or fed to a model that requires dense inputs, memory usage goes up and training slows down.
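To get a feel for how sparse the result is, here is a small NumPy sketch. The row count, the 100 categories, and the random category ids are all made up for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n_rows, n_categories = 1_000, 100

# Hypothetical feature: each row holds one of 100 category ids.
category_ids = rng.integers(0, n_categories, size=n_rows)

# Dense one-hot matrix: row i has a single 1 in column category_ids[i].
one_hot = np.zeros((n_rows, n_categories), dtype=np.uint8)
one_hot[np.arange(n_rows), category_ids] = 1

density = one_hot.mean()  # fraction of entries that are 1
print(one_hot.shape, density)  # (1000, 100) 0.01
```

With 100 categories, only 1 entry in 100 is non-zero. Sparse storage formats (for example those in `scipy.sparse`) keep only the non-zero entries, which is why they are a common choice for one-hot data.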

So, what’s the takeaway? One-Hot Encoding is great for models that don’t care about multicollinearity and can handle high-dimensional data, but it’s not always the best choice if you’re working with a feature that has a lot of categories.

One-Hot Encoding vs. Dummy Encoding: Key Differences

Core Difference

“In the world of encoding, it’s not just about converting words to numbers; it’s about how you choose to tell the story.”

When it comes to One-Hot Encoding and Dummy Encoding, the heart of the matter lies in how each method handles categories. Here’s the deal: One-Hot Encoding keeps all categories intact, creating a separate binary column for each. On the flip side, Dummy Encoding takes a more streamlined approach by dropping one category, often the first, to avoid what’s known as the “dummy variable trap.” The trap is this: with all categories kept, the columns in every row sum to 1, so any one column can be computed from the others. In models with an intercept, that redundancy means the coefficients are no longer uniquely determined.
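Here is a minimal side-by-side sketch of the two encodings in pandas. The `colors` series is toy data, and `drop_first=True` is just one way to pick the dropped category (it removes the alphabetically first one for string data).

```python
import pandas as pd

colors = pd.Series(["Red", "Blue", "Green", "Red"], name="color")

# One-Hot Encoding: keeps all three categories.
one_hot = pd.get_dummies(colors, dtype=int)

# Dummy Encoding: drops the first category (Blue here).
dummy = pd.get_dummies(colors, drop_first=True, dtype=int)

print(list(one_hot.columns))  # ['Blue', 'Green', 'Red']
print(list(dummy.columns))    # ['Green', 'Red']
```

In the dummy-encoded frame, a Blue row shows up as all zeros, so no information is lost by dropping the column.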

Dimensionality

You might be wondering, how does this difference affect dimensionality?

In practice, if you’re dealing with high-cardinality features (think hundreds of unique categories), One-Hot Encoding can inflate your dataset significantly. For instance, if you have a feature like “City” with 100 unique entries, you’ll end up with 100 new columns. This high dimensionality can complicate your model and increase the risk of overfitting.

In contrast, Dummy Encoding drops one category, so in the same example you’ll create 99 columns instead of 100. That is only one column fewer, so the real benefit is removing the redundant column rather than shrinking the dataset. For truly high-cardinality features, neither encoding keeps dimensionality small.
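The column counts are easy to check. This sketch builds a hypothetical “City” feature with 100 made-up city names and compares the shapes of the two encodings.

```python
import pandas as pd

# Hypothetical "City" feature: 100 distinct values, each appearing 3 times.
cities = pd.Series([f"city_{i:03d}" for i in range(100)] * 3, name="City")

one_hot = pd.get_dummies(cities)
dummy = pd.get_dummies(cities, drop_first=True)

print(one_hot.shape)  # (300, 100)
print(dummy.shape)    # (300, 99)
```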

Model-Specific Considerations

Now, let’s dive into how each encoding interacts with specific models:

- Regression Models: When you’re using linear regression or any model sensitive to multicollinearity, Dummy Encoding is your best friend. By dropping one category, you sidestep the issue of linear dependence between your categorical variables. This helps in producing more reliable coefficient estimates.

- Tree-Based Models & Neural Networks: Here’s where things get interesting. Tree-based models, like Random Forests or Gradient Boosting Machines, aren’t impacted by multicollinearity. They can effectively use One-Hot Encoding without any hiccups. The same goes for neural networks, which can leverage the full representation of categories to capture intricate patterns.
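The linear dependence that matters for regression can be seen directly in the rank of the design matrix. The sketch below is a toy illustration with NumPy; the six rows and the Blue/Green/Red ids are assumptions.

```python
import numpy as np

# Category ids for six rows: 0 = Blue, 1 = Green, 2 = Red.
ids = np.array([0, 1, 2, 0, 1, 2])
one_hot = np.eye(3)[ids]                 # full one-hot, shape (6, 3)
intercept = np.ones((len(ids), 1))

X_full = np.hstack([intercept, one_hot])          # intercept + 3 columns
X_dummy = np.hstack([intercept, one_hot[:, 1:]])  # intercept + 2 columns (Blue dropped)

print(np.linalg.matrix_rank(X_full))   # 3, although X_full has 4 columns
print(np.linalg.matrix_rank(X_dummy))  # 3, matching its 3 columns
```

The full one-hot matrix with an intercept has one more column than its rank, which is exactly the redundancy that Dummy Encoding removes. Tree-based models never solve for coefficients, so this rank deficiency does not affect them.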

Model Interpretability

You might find it fascinating how encoding affects interpretability too.

- With Dummy Encoding, your coefficients in a linear model (like logistic regression) can be more straightforward to interpret. Since one category is omitted, it serves as a baseline, making it easier to understand the effect of the other categories relative to that baseline.

- On the other hand, One-Hot Encoding can be a bit more opaque, especially in complex models like deep learning. Here, the sheer number of binary features can lead to a loss of clarity regarding how each input is contributing to the predictions.
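To see the baseline interpretation in action, here is a small sketch. It uses ordinary least squares rather than logistic regression to keep the arithmetic simple, and the location ids and target values are invented for the example.

```python
import numpy as np

# Hypothetical target values for three locations, two rows each.
ids = np.array([0, 0, 1, 1, 2, 2])  # 0 = Rural, 1 = Suburban, 2 = Urban
y = np.array([10.0, 12.0, 15.0, 17.0, 20.0, 22.0])

# Dummy encoding with Rural as the omitted baseline, plus an intercept.
X = np.hstack([np.ones((6, 1)), np.eye(3)[ids][:, 1:]])
coef, *_ = np.linalg.lstsq(X, y, rcond=None)

print(coef)  # approximately [11.  5. 10.]
```

The intercept (11) is the mean of the baseline category, Rural. The other two coefficients are the differences of the Suburban mean (16) and the Urban mean (21) from that baseline.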

Impact on Model Performance

Finally, let’s discuss performance. You may find it surprising, but the choice of encoding can lead to notable differences in training times and model performance:

- One-Hot Encoding works well with models that can exploit a separate input per category, like neural networks, as long as your hardware can handle it. However, every extra column adds computation and memory, so if your feature set becomes too large, training can slow to a crawl, especially in large datasets.

- Dummy Encoding produces only one column fewer per feature, so any gain in training speed is usually small. Its main benefit in regression models is removing the perfect multicollinearity with the intercept, which gives stable, uniquely determined coefficients.
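One caveat worth checking for yourself: in a plain, unregularized least-squares fit, both encodings span the same column space, so the fitted values should coincide and the difference shows up in the coefficients instead. The sketch below tests this on synthetic data; the 4-category feature and the target are made up.

```python
import numpy as np

rng = np.random.default_rng(0)
ids = rng.integers(0, 4, size=200)        # hypothetical 4-category feature
y = ids * 2.0 + rng.normal(size=200)      # synthetic target

one_hot = np.eye(4)[ids]
ones = np.ones((200, 1))
X_full = np.hstack([ones, one_hot])          # one-hot + intercept (rank-deficient)
X_dummy = np.hstack([ones, one_hot[:, 1:]])  # dummy + intercept (full rank)

# lstsq returns a minimum-norm solution when the matrix is rank-deficient.
coef_full, *_ = np.linalg.lstsq(X_full, y, rcond=None)
coef_dummy, *_ = np.linalg.lstsq(X_dummy, y, rcond=None)

same = np.allclose(X_full @ coef_full, X_dummy @ coef_dummy)
print(same)  # True
```

Regularized models and solvers that cannot handle rank-deficient inputs may behave differently, so treat this as a sanity check rather than a general guarantee.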

When to Use One-Hot Encoding vs. Dummy Encoding

Guidelines for Use

Now, let’s get practical. Here are some general rules to help you decide when to use one encoding over the other:

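- Use One-Hot Encoding when you have:

  - A manageable number of categories (typically fewer than 10 to 15).

  - Models that benefit from rich feature representations (e.g., neural networks).

- Opt for Dummy Encoding when:

  - You want one category to act as the baseline that the others are compared against.

  - You’re using regression models where multicollinearity is a concern.

Neither option is a good fit for a high-cardinality feature: Dummy Encoding removes only one column, so the encoded data stays almost as wide.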
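These rules of thumb can be folded into a small helper. The function below is purely hypothetical: the name `encode_feature`, the `linear_model` flag, and the default threshold of 15 are illustrative assumptions, not part of any library.

```python
import warnings

import pandas as pd


def encode_feature(values: pd.Series, linear_model: bool,
                   max_categories: int = 15) -> pd.DataFrame:
    """Hypothetical helper: dummy-encode for linear models, one-hot otherwise."""
    n_categories = values.nunique()
    if n_categories > max_categories:
        # Either encoding will produce a wide frame in this case.
        warnings.warn(f"{values.name} has {n_categories} categories; "
                      "the encoded frame will be wide")
    return pd.get_dummies(values, prefix=values.name,
                          drop_first=linear_model, dtype=int)


location = pd.Series(["Urban", "Suburban", "Rural", "Urban"], name="location")
for_regression = encode_feature(location, linear_model=True)
for_network = encode_feature(location, linear_model=False)

print(list(for_regression.columns))  # ['location_Suburban', 'location_Urban']
print(list(for_network.columns))     # ['location_Rural', 'location_Suburban', 'location_Urban']
```

The threshold only triggers a warning here, since what counts as “too many” categories depends on your dataset and model.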

Algorithm Compatibility

Understanding which algorithms work better with each encoding is crucial.

- Regression Models: Generally, these benefit more from Dummy Encoding due to the multicollinearity issue.

- Tree-Based Methods: These are flexible; both One-Hot and Dummy Encoding work well, but One-Hot keeps an explicit column for every category, so a tree can split on any single category directly.

- Deep Learning: One-Hot Encoding is typically preferred, as neural networks can effectively learn from a larger number of features.

Practical Examples

To bring this all together, let’s look at some real-world scenarios:

- Logistic Regression: If you’re predicting whether a customer will buy a product based on their location (e.g., Urban, Suburban, Rural), Dummy Encoding can simplify interpretation and improve reliability.

- Deep Learning for Image Classification: In this case, you might have a dataset where you classify images into various categories, like animals (Cat, Dog, Bird). Here the categories are the labels rather than input features, and One-Hot Encoding them gives the network one target column per class to predict.
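For the image classification scenario, the class labels can be one-hot encoded in a single line of NumPy. This is a minimal sketch; the class order and the four example labels are assumptions.

```python
import numpy as np

class_names = ["Bird", "Cat", "Dog"]
labels = np.array([1, 2, 0, 1])  # integer class ids for four images

# Indexing the identity matrix picks one row per label:
# one row per image, one column per class.
targets = np.eye(len(class_names))[labels]

print(targets)
```

Each row of `targets` has a single 1 in the position of that image's class, which is the shape many classification losses expect. Some frameworks also accept the integer labels directly.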

Conclusion

To recap:

- One-Hot Encoding shines when dealing with models that thrive on rich, high-dimensional data, such as neural networks and tree-based methods. Its ability to create distinct columns for each category allows these models to capture intricate patterns, making it a powerful choice when the dimensionality is manageable.

- Dummy Encoding, on the other hand, is your go-to method for regression models, especially when you’re concerned about multicollinearity. By dropping one category, you simplify the model and enhance interpretability, all while keeping a tighter rein on dimensionality.

You might be wondering, how do you decide which encoding to use? Always consider the context of your problem, the algorithms at play, and the characteristics of your dataset.

Remember, every dataset tells a story, and how you choose to encode your categorical variables shapes that narrative. By making informed decisions about encoding, you’re not just preparing data; you’re setting the stage for the success of your entire model.

So, as you embark on your data science journey, keep these insights in mind. Your choice of encoding could very well be the difference between a model that merely performs and one that truly excels. Go forth and encode wisely!