In the ever-evolving world of AI, it feels like every day brings a new tool or framework designed to help us build more intelligent systems. Whether you’re a seasoned data scientist or someone just getting their hands dirty with AI development, you’ve likely seen the increasing importance of large language models (LLMs) in powering real-world applications. From chatbots to intelligent assistants, LLMs are redefining how we interact with machines.

But here’s the catch: with so many tools at your disposal, how do you choose the right one for your project? That’s where Langchain and LiteLLM come into play. Both are popular frameworks in the AI community, but they serve very different needs. In this guide, we’re going to break down what makes each of them unique and, more importantly, how to determine which one is the best fit for you. Whether you’re looking to streamline your workflow or build complex, multi-step reasoning systems, this comparison will help you make an informed decision.

By the end of this post, you’ll walk away with a deep understanding of how Langchain and LiteLLM stack up against each other in terms of functionality, performance, and the types of problems they solve best.

2. What is Langchain?

You might be familiar with the saying, “To solve complex problems, you need complex tools.” Langchain embodies that philosophy.

Langchain is a powerful framework that allows you to chain together large language model (LLM) operations into sophisticated workflows. It’s like giving you a toolkit where each tool performs a specific task, but the real magic happens when you start combining them in creative ways. Imagine building an AI system that can answer questions by parsing documents, gathering external data, and making sense of it all—Langchain gives you that level of flexibility.

Core Features:

- Chainable Operations: You can link together multiple actions, such as extracting information from documents, reasoning over that information, and then generating responses.

- Modularity: Its plug-and-play nature means you can use different components for different stages of the process, customizing it to your needs.

- Integration with External Knowledge Sources: Whether it’s web data, databases, or other APIs, Langchain integrates seamlessly to bring in the data your LLM needs to make more informed decisions.
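To make "chainable operations" concrete, here is a minimal, framework-free sketch of the pattern. It is loosely modeled on the pipe-style composition in LangChain Expression Language, where you write something like `prompt | model | parser` and run it with `.invoke()`. The `Step` class and `fake_llm` function below are hypothetical stand-ins, not Langchain's API, and the "LLM" is a stub so the example runs offline.

```python
from typing import Any, Callable


class Step:
    """Hypothetical composable step: wraps a function and supports `a | b`."""

    def __init__(self, fn: Callable[[Any], Any]) -> None:
        self.fn = fn

    def __or__(self, other: "Step") -> "Step":
        # Left-to-right composition: self's output becomes other's input.
        return Step(lambda x: other.fn(self.fn(x)))

    def invoke(self, x: Any) -> Any:
        return self.fn(x)


def fake_llm(prompt: str) -> str:
    # Stub for a real model call: returns a padded, canned reply echoing the question.
    question = prompt.split("Question: ", 1)[1]
    return f"  ANSWER: (stub reply to {question!r})  "


prompt = Step(lambda q: f"Answer concisely.\nQuestion: {q}")
model = Step(fake_llm)
parser = Step(lambda text: text.strip().removeprefix("ANSWER: "))

chain = prompt | model | parser
print(chain.invoke("What is Langchain?"))
```

Because each step only agrees on its input and output, you could swap `fake_llm` for a real model client or put a retrieval step in front without touching the other steps, which is the modularity described in the list above.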

Target Audience: Langchain is a go-to framework for data scientists, AI researchers, and developers who are working on sophisticated projects. Think of workflows where multiple LLM tasks are chained together—like building an autonomous agent that can gather information from the web, interpret it, and generate a response. It’s perfect for those complex, multi-step reasoning tasks where each part of the system needs to work in harmony.

Current Use Cases: Let’s say you’re building a customer service chatbot that needs to not only answer questions but also pull up documents or search a knowledge base in real time. Langchain excels here because it can integrate these various processes into one seamless workflow. Or maybe you’re working on a system for document parsing: you could use Langchain to break the document down, extract key insights, and then present the results in a user-friendly manner.

3. What is LiteLLM?

Now, let’s switch gears a bit. If Langchain is the heavy-duty machine for complex workflows, LiteLLM is the agile, sleek tool designed for efficiency and simplicity.

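LiteLLM was built with one thing in mind: make it easier for developers to work with large language models without the overhead of a complex framework. At its core, it gives you a single, OpenAI-style way to call models from many different providers, hosted or local, so switching providers is largely a matter of changing the model name. It strips away the bells and whistles and focuses on delivering a lightweight solution for integrating LLMs into your applications. Think of it as the minimalist’s approach to LLM-powered projects.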

Core Features:

- Lightweight Architecture: You won’t find complex chains or modules here. LiteLLM is designed to be efficient and easy to integrate, without the need for a lot of setup or extra dependencies.

- Ease of Integration: You’ll find it quick to plug LiteLLM into your existing systems, making it perfect for rapid deployment.

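- Optimized for Performance: LiteLLM does not run models itself; it is a thin layer that forwards your request to the model provider (a hosted API or a local model server) and returns the response in a consistent format. Because it adds little on top of the model call, it is a great choice for environments where resources are limited.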
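LiteLLM’s central idea is one call signature across many providers: you call `litellm.completion(model=..., messages=...)` with OpenAI-style messages, and the provider is inferred from the model name. The sketch below imitates that shape using only the standard library so it runs offline. The `completion` function, the `PROVIDERS` table, the default-provider rule, and the stub backends are all hypothetical and do not reproduce LiteLLM’s internals.

```python
from typing import Callable

Message = dict[str, str]


# Hypothetical stub backends; a real library would call each provider's API here.
def _stub_openai(model: str, messages: list[Message]) -> str:
    return f"[openai:{model}] {messages[-1]['content']}"


def _stub_ollama(model: str, messages: list[Message]) -> str:
    return f"[ollama:{model}] {messages[-1]['content']}"


PROVIDERS: dict[str, Callable[[str, list[Message]], str]] = {
    "openai": _stub_openai,
    "ollama": _stub_ollama,
}


def completion(model: str, messages: list[Message]) -> dict:
    """Route 'provider/model' to a backend and return an OpenAI-shaped response dict."""
    provider, _, name = model.partition("/")
    if not name:  # no "provider/" prefix: fall back to an assumed default provider
        provider, name = "openai", provider
    backend = PROVIDERS.get(provider)
    if backend is None:
        raise ValueError(f"unknown provider: {provider}")
    text = backend(name, messages)
    return {"model": model, "choices": [{"message": {"role": "assistant", "content": text}}]}


msgs = [{"role": "user", "content": "Say hi"}]
for m in ("gpt-4o-mini", "ollama/llama3"):
    print(completion(m, msgs)["choices"][0]["message"]["content"])
```

Application code reads the reply from the same place regardless of provider, so swapping models is a one-string change. That is the main reason LiteLLM is quick to drop into an existing system.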

Target Audience: If you’re a developer or AI practitioner looking for a streamlined solution to integrate LLMs into your app without needing to learn a complex framework, LiteLLM is for you. It’s especially useful if your project doesn’t require the elaborate, multi-step workflows that Langchain enables. Instead, LiteLLM focuses on simplicity and performance—ideal for projects where you just need to get the job done, fast.

Current Use Cases: Imagine you’re building a web app with limited infrastructure. LiteLLM can power your app’s AI features without draining your server’s resources. Another example could be a real-time, low-latency LLM application where speed is critical and you don’t want the overhead that comes with heavier frameworks.

4. Key Comparison Factors

When you’re comparing tools like Langchain and LiteLLM, it’s crucial to focus on the key factors that directly affect how these platforms will perform in real-world projects. You and I both know that architecture, performance, flexibility, and integrations are make-or-break for any AI system. So let’s dive into these aspects and see where each tool stands.

Architecture & Design Philosophy

You might be wondering: What’s the core difference in how these two frameworks are built? Here’s the deal:

- Langchain is designed for those who need modularity and extensibility. Its architecture revolves around building complex workflows where different LLM tasks are chained together. Picture a machine with several cogs all working in sync: that’s Langchain. Its design makes it perfect for scenarios where you need to pull data from various sources, process it in different stages, and create complex reasoning systems. If you’re dealing with sophisticated, multi-step applications like document processing pipelines or autonomous agents, this framework shines. For example, if you need your AI to analyze a document, cross-reference it with external knowledge, and generate a summary, Langchain gives you the flexibility to orchestrate those tasks in a modular way.

- LiteLLM, on the other hand, embraces simplicity. Its architecture is streamlined, built for efficiency and ease of deployment. Imagine a Swiss Army knife with just the essentials and no extra frills. LiteLLM is ideal for projects that need quick integration with LLMs without a lot of overhead. It doesn’t have as many moving parts as Langchain, which makes it perfect when your goal is to get up and running fast without a steep learning curve. Think about it like this: if you’re building a chatbot for a small website or a lightweight AI-powered tool where simplicity and speed matter more than complex features, LiteLLM’s minimalist architecture makes perfect sense.

Performance & Efficiency

Now, let’s talk about performance. We’ve all been there: you’re deep into production, and suddenly latency, memory use, or API costs start hitting their limits. That’s why the efficiency of your chosen tool is so critical.

- Langchain tends to be more resource-intensive because of its modular design. With great power comes great responsibility, right? The flexibility that lets you chain different operations and integrate multiple data sources also means more LLM calls per request, more dependencies, and more work happening in your application process, especially in large-scale or multi-step workflows. (GPU load comes from the model itself, wherever it runs, not from the orchestration layer.) However, for many of you working on enterprise-grade applications where accuracy and complexity matter more than raw speed, the trade-off is usually worth it. In a production environment, Langchain might require more resources to scale, especially when working with large datasets or complex workflows that involve multiple LLM calls.

- LiteLLM, by contrast, is optimized for efficiency. It’s designed to run with minimal overhead, making it ideal for projects where resource constraints are a concern. Because it is a thin wrapper around the model call, it adds little memory or latency of its own, which makes it a better fit for low-latency applications where speed is key. For instance, if you’re deploying an AI tool in an environment with limited computational power, LiteLLM’s lightweight design helps keep the integration layer from becoming a performance bottleneck.
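Much of the extra cost of multi-step workflows is simple arithmetic on latency. As a rough model that ignores framework overhead, a chain of $n$ dependent LLM calls with per-call latencies $t_1, \dots, t_n$ takes their sum end to end, while calls that do not depend on each other can run concurrently and take roughly as long as the slowest one:

$$
T_{\text{sequential}} = \sum_{i=1}^{n} t_i, \qquad T_{\text{concurrent}} \approx \max_{1 \le i \le n} t_i
$$

With illustrative numbers, three dependent calls of about 1 s each take about 3 s, while a single call takes about 1 s. Running independent steps of a chain concurrently is one way to narrow that gap.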

Flexibility & Modularity

When it comes to flexibility, Langchain wins hands down. Its modular nature allows you to create custom chains of actions, and it’s fully extensible. This means you can adjust workflows, add new components, and experiment with different processes easily. If you’re someone who loves to tweak and customize every part of your AI system, Langchain gives you the freedom to do just that.

- You can break tasks into smaller parts and execute them in a logical sequence. For example, first, gather information from an API, then process it using an LLM, and finally feed the output into a database or other services. This flexibility is what makes Langchain a favorite for developers who are building complex applications with multiple moving parts.
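The three-stage example above (gather from an API, process with an LLM, store the result) can be sketched as independent functions wired together. `fetch_reviews` and `summarize` are hypothetical stubs standing in for a real HTTP call and a real model call. The storage step uses Python’s built-in `sqlite3` with an in-memory database so the sketch runs as-is.

```python
import sqlite3


def fetch_reviews(product_id: str) -> list[str]:
    # Hypothetical stub for an external API call (ignores product_id).
    return ["Great battery life", "Screen scratches easily", "Great value"]


def summarize(reviews: list[str]) -> str:
    # Hypothetical stub for an LLM call: counts reviews that mention "great".
    positive = sum("great" in r.lower() for r in reviews)
    return f"{positive} of {len(reviews)} reviews are positive"


def store(conn: sqlite3.Connection, product_id: str, summary: str) -> None:
    conn.execute(
        "CREATE TABLE IF NOT EXISTS summaries (product_id TEXT PRIMARY KEY, summary TEXT)"
    )
    conn.execute("INSERT OR REPLACE INTO summaries VALUES (?, ?)", (product_id, summary))
    conn.commit()


def run_pipeline(conn: sqlite3.Connection, product_id: str) -> str:
    # Each stage depends only on the previous stage's output, so any one can be swapped.
    summary = summarize(fetch_reviews(product_id))
    store(conn, product_id, summary)
    return summary


conn = sqlite3.connect(":memory:")
print(run_pipeline(conn, "sku-123"))
print(conn.execute("SELECT summary FROM summaries WHERE product_id = ?", ("sku-123",)).fetchone())
```

Replacing the in-memory database with a production one, or the stub with a real model client, changes one function and leaves the pipeline wiring alone.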

On the flip side, LiteLLM is all about being streamlined. Its philosophy is more about getting things done with fewer steps and less customization. If you’re building an application where simplicity is your top priority—let’s say, a web service that needs to be deployed quickly—LiteLLM’s approach will suit you better. It’s designed to be easy to use, with fewer components to manage, meaning you can focus on your application rather than the underlying architecture.

Integration with External Tools

Here’s the thing: when you’re working with AI, integration is everything.

- Langchain is known for its ability to integrate with a wide range of third-party services, APIs, and external tools. Whether you’re pulling data from web APIs, integrating with external LLM models, or connecting to knowledge bases, Langchain can handle it. It’s especially useful in enterprise environments where you need to combine LLMs with other data sources and processing tools. You can think of Langchain as a hub for all your AI needs, capable of pulling in data from various places and combining it into a unified workflow.

- LiteLLM, on the other hand, keeps things simple. Its integrations are focused on LLM providers themselves: it puts many hosted and local model providers behind one interface, but it does not ship the document loaders, vector-store connectors, and tool wrappers that Langchain provides. If you need to work within a more closed environment or have limited external data sources, LiteLLM’s simplicity can be a benefit. However, if your project requires heavy integration with non-LLM data sources and tools, you might find it lacking compared to Langchain.

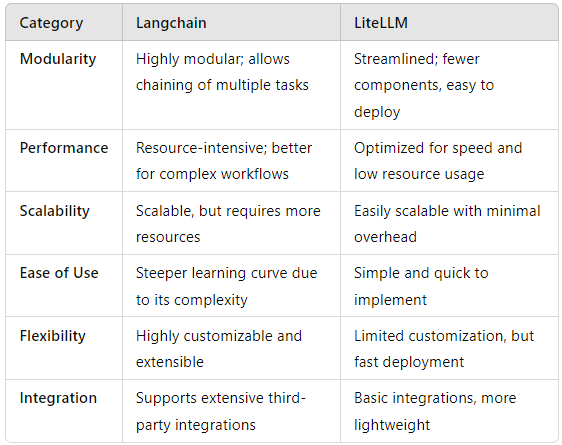

5. Comparison Table

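Now, let’s break it down even further with a quick comparison table that summarizes the factors above:

| Factor | Langchain | LiteLLM |
|---|---|---|
| Architecture & design | Modular and extensible; chains multiple LLM tasks into workflows | Streamlined; minimal setup for integrating LLMs |
| Performance & efficiency | More resource-intensive; multi-step workflows mean more LLM calls to scale | Minimal overhead; suited to low-latency and resource-limited setups |
| Flexibility & modularity | High; custom chains and swappable components | Deliberately limited; fewer components to manage |
| External integrations | Broad: APIs, databases, knowledge bases, third-party tools | Basic LLM services; fewer third-party integrations |
| Best for | Complex, multi-step reasoning systems | Quick, simple LLM integration |

This table should help you see at a glance where each framework excels and where it might fall short depending on your needs. It’s all about matching the tool to the task, and with Langchain and LiteLLM, you’ve got two strong options, each designed for different challenges.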

By focusing on these key comparison factors, you’ll be well-equipped to choose the framework that fits your specific project, whether it’s building a complex, multi-step AI system or deploying a lightweight, efficient LLM-powered application.

6. Best Use Cases for Langchain

Langchain really shines when you’re working on projects that require multi-step reasoning or customized LLM pipelines. Think of it as a tool designed for those intricate tasks where each step is dependent on another. The beauty of Langchain is in its ability to link together various LLM actions into a seamless chain, which makes it ideal for more complex workflows.

Here’s the deal: if your project needs to connect multiple data sources, run reasoning processes, and maybe even involve external knowledge, Langchain is the tool for you. Imagine you’re building an AI-powered virtual assistant. Not only does this assistant need to interpret a user’s question, but it also needs to fetch relevant data from external databases, reason through multiple sources, and then generate a response. That’s where Langchain’s modularity comes in.

Example #1: Context-Aware AI Agents

Say you’re developing an AI agent that can dynamically respond to different contexts—whether it’s financial data, customer service queries, or technical support. The agent might need to reference external APIs, sift through past conversation histories, and provide a reasoned response based on context. Langchain’s ability to chain different actions (like querying external data sources and reasoning over that data) makes it perfect for this.
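An agent like this typically runs a loop: the model looks at the query and prior context, picks a tool, reads the tool’s result, and then answers. The sketch below shows a single pass of that loop, with a keyword-based `choose_tool` standing in for the model’s routing decision. The tool names, the `history` list, and every function here are hypothetical; Langchain’s own agent classes are not used.

```python
from typing import Callable, Optional


# Hypothetical tools; real ones would call external APIs or databases.
def stock_price(query: str) -> str:
    return "ACME is trading at 42.0 (stub data)"


def order_status(query: str) -> str:
    return "Order #1001 shipped yesterday (stub data)"


TOOLS: dict[str, Callable[[str], str]] = {"finance": stock_price, "support": order_status}


def choose_tool(query: str) -> Optional[str]:
    # Stand-in for the LLM's routing decision: a simple keyword match.
    q = query.lower()
    if "stock" in q or "price" in q:
        return "finance"
    if "order" in q:
        return "support"
    return None


def answer(query: str, history: list[str]) -> str:
    tool_name = choose_tool(query)
    observation = TOOLS[tool_name](query) if tool_name else "no tool needed"
    # Keep a record of each turn so later turns can use the conversation context.
    history.append(f"user: {query} | tool: {tool_name} | saw: {observation}")
    # A real agent would send the query, history, and observation back to the LLM here.
    return f"Based on {tool_name or 'context'}: {observation}"


history: list[str] = []
print(answer("What's the stock price of ACME?", history))
print(answer("Where is my order?", history))
print(len(history))
```

In a real system the routing and the final wording both come from the model, while the tools and the history store are the external pieces Langchain helps you connect.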

Example #2: Knowledge-Based Chatbots

Think about creating a chatbot that isn’t just answering simple queries but is also tapping into various knowledge bases to give well-informed responses. Langchain can chain LLM actions like extracting information from a knowledge base, comparing it with user queries, and then presenting the best possible answer—all in real time.
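The "extract from a knowledge base, compare with the query, then answer" flow is commonly called retrieval-augmented generation. Here is a deliberately tiny sketch: it scores documents by word overlap with the query, a swapped-in stand-in for the embedding search a production system would typically use, and builds a prompt from the best match. `KNOWLEDGE_BASE`, `retrieve`, and `build_prompt` are hypothetical names, and the final model call is left out.

```python
import re

KNOWLEDGE_BASE = [
    "Refunds are processed within 5 business days.",
    "Premium plans include 24/7 phone support.",
    "Passwords can be reset from the account settings page.",
]


def tokens(text: str) -> set[str]:
    # Lowercase word set used for the toy relevance score.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    # Rank documents by how many query words they share; ties keep original order.
    q = tokens(query)
    ranked = sorted(docs, key=lambda d: len(q & tokens(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


question = "How do I reset my password?"
print(build_prompt(question, retrieve(question, KNOWLEDGE_BASE)))
```

Grounding the prompt in retrieved text is what lets the chatbot give "well-informed responses" instead of relying only on what the model memorized.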

7. Best Use Cases for LiteLLM

Now, let’s talk about LiteLLM. This tool excels in environments where simplicity and efficiency are the top priorities. You’re not building something deeply complex here; instead, you want to deploy an LLM quickly, without worrying about overloading your servers or dealing with complex configurations.

Picture this: you’re building a simple web app, and you need some basic LLM-powered functionality, like natural language processing for user queries or simple text generation. LiteLLM is your go-to tool because it’s lightweight and super easy to deploy. It won’t demand high-end hardware or complex setups, which is crucial if you’re running an app in a resource-limited environment.

Example #1: Simple Web Apps

Let’s say you’re developing a customer feedback app that collects user responses and generates summaries or insights. LiteLLM allows you to integrate an LLM without the overhead that comes with more complex systems. You don’t need multi-step processing here; just quick, low-latency LLM responses that fit well within a small-scale system.
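For a case like this, the whole LLM integration can be one prompt and one call. The sketch batches all feedback into a single prompt so each summary costs one round trip. `build_summary_prompt`, `summarize_feedback`, the 50-item cap, and the `call_llm` parameter are hypothetical; in a LiteLLM-based app, `call_llm` might wrap `litellm.completion(...)`, but here it is injected so the example runs with a stub.

```python
from typing import Callable


def build_summary_prompt(feedback: list[str], max_items: int = 50) -> str:
    # Number each response so the model can refer to them; cap the batch size.
    lines = [f"{i}. {text.strip()}" for i, text in enumerate(feedback[:max_items], start=1)]
    return "Summarize the main themes in this customer feedback:\n" + "\n".join(lines)


def summarize_feedback(feedback: list[str], call_llm: Callable[[str], str]) -> str:
    if not feedback:
        return "No feedback yet."
    return call_llm(build_summary_prompt(feedback))  # exactly one model call


def stub_llm(prompt: str) -> str:
    # Stub model: reports how many numbered items it received.
    count = sum(1 for line in prompt.splitlines() if line[:1].isdigit())
    return f"Summary of {count} responses (stub)"


print(summarize_feedback(["Love it", " Too slow ", "Needs dark mode"], stub_llm))
```

Injecting the model call as a parameter keeps the app logic independent of the provider and makes it easy to test without network access.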

Example #2: Fast API Integrations

You’ve got a web service that needs to interact with an LLM for text generation—like a marketing app that auto-generates headlines based on product descriptions. LiteLLM makes it simple to add LLM functionality quickly without sacrificing performance. This is perfect for those cases where speed and resource efficiency are key.

8. When to Choose Langchain

So, when should you go all-in on Langchain? You want to use Langchain when your project needs custom workflows, complex data integration, or multi-step reasoning. It’s like choosing a highly specialized tool for a very specific job. If your project involves multiple steps, such as extracting, transforming, and reasoning over data before generating a final result, Langchain is your best bet.

Let’s say you’re developing a tool for legal document analysis. You need to scan documents, extract relevant clauses, cross-reference those with legal databases, and then generate a summary or recommendation. Langchain is perfect here because it can handle each of these tasks as separate, chainable components. You get the flexibility to customize how each step interacts with the next, ensuring the system works exactly how you need it.
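Here is the shape of that legal-analysis pipeline as separate, replaceable stages, with a small runner that records each stage’s output so you can inspect how one step feeds the next. Everything is a hypothetical stub: `extract_clauses` uses a regex on numbered headings instead of an LLM, `PRECEDENTS` stands in for a legal database, and `summarize` is where a model call would go. The point is the structure, not the legal logic.

```python
import re
from typing import Any, Callable

# Hypothetical stand-in for a legal database lookup.
PRECEDENTS = {"termination": "Case A v. B (stub)", "liability": "Case C v. D (stub)"}


def extract_clauses(doc: str) -> list[str]:
    # Stub for LLM-based extraction: grab titles from lines like "1. Title: text".
    return re.findall(r"^\d+\.\s*(\w+):", doc, flags=re.MULTILINE)


def cross_reference(clauses: list[str]) -> dict[str, str]:
    return {c: PRECEDENTS.get(c.lower(), "no match") for c in clauses}


def summarize(refs: dict[str, str]) -> str:
    # A real system would send `refs` to an LLM; here we format them directly.
    return "; ".join(f"{k} -> {v}" for k, v in refs.items())


def run_with_trace(stages: list[Callable[[Any], Any]], data: Any) -> tuple[Any, list[tuple[str, Any]]]:
    """Run stages in order, recording each stage's name and output for inspection."""
    trace = []
    for stage in stages:
        data = stage(data)
        trace.append((stage.__name__, data))
    return data, trace


contract = """1. Termination: Either party may terminate with 30 days notice.
2. Liability: Liability is capped at fees paid.
3. Confidentiality: Both parties keep terms private."""

result, trace = run_with_trace([extract_clauses, cross_reference, summarize], contract)
print(result)
for name, output in trace:
    print(name, "->", output)
```

Keeping a per-stage trace is a cheap way to see which step went wrong when a multi-step result looks off, which matters more as chains grow.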

Also, if your project requires integrating with external knowledge sources, like APIs, databases, or even third-party LLMs, Langchain’s wide support for integrations makes it an excellent choice. This extensibility is crucial in enterprise settings where your application might need to interact with other systems or pull data from multiple sources.

9. When to Choose LiteLLM

On the flip side, LiteLLM is the go-to choice when you need something up and running fast and don’t require complex operations. You’ll love LiteLLM if you’re focused on speedy deployment and minimal configuration—especially if your project is constrained by resources or you just don’t need the level of complexity that Langchain offers.

For example, if you’re launching a content generation platform that automates social media post creation based on user inputs, LiteLLM is ideal. You just need basic LLM functionality—no multi-step reasoning or complex data manipulation. LiteLLM is optimized for projects where simplicity and speed matter more than flexibility.

Another scenario where LiteLLM shines is in low-latency applications. Maybe you’re building a real-time customer support tool that needs to quickly process incoming text and return relevant responses without lag. LiteLLM’s lean architecture adds very little on top of the model call itself, so it helps your system stay responsive, even in environments with limited computational power.
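For a real-time tool, one common safeguard is a hard time budget on each model call, with a holding reply if the budget is exceeded. LiteLLM offers an async `acompletion` function that could sit inside such a wrapper; the sketch below uses a stub coroutine instead so it runs offline. The `reply_within` helper, the 0.5-second default budget, and the fallback text are illustrative assumptions.

```python
import asyncio


async def stub_model(prompt: str, delay: float) -> str:
    # Stand-in for an async model call; `delay` simulates network + generation time.
    await asyncio.sleep(delay)
    return f"Reply to: {prompt}"


async def reply_within(prompt: str, delay: float, budget_s: float = 0.5) -> str:
    try:
        return await asyncio.wait_for(stub_model(prompt, delay), timeout=budget_s)
    except asyncio.TimeoutError:
        # Budget exceeded: return a holding reply instead of making the user wait longer.
        return "Thanks, we're looking into this and will reply shortly."


async def main() -> None:
    # Both requests run concurrently; total wall time is bounded by the budget.
    fast, slow = await asyncio.gather(
        reply_within("reset password", delay=0.05),
        reply_within("complex billing issue", delay=2.0),
    )
    print(fast)
    print(slow)


asyncio.run(main())
```

The budget caps worst-case latency for the user, while the model call itself still determines the typical response time.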

Conclusion

So, where does that leave us?

Choosing between Langchain and LiteLLM really boils down to understanding the complexity of your project. If you need flexibility, multi-step reasoning, or integration with external knowledge sources, Langchain is the obvious choice. But if you’re more concerned with simplicity, quick deployment, and resource efficiency, then LiteLLM will be your best friend.

In short:

- Use Langchain for projects where customization and complexity rule the day.

- Use LiteLLM for simpler applications that demand speed, efficiency, and ease of use.

By now, you should have a clear understanding of where each of these tools fits into your AI toolkit. The decision comes down to your specific needs—do you want to build something complex, or do you want to get something out the door fast? Either way, both Langchain and LiteLLM offer powerful ways to integrate LLMs into your projects.